Don't mess with Würzburg!

The dangers of an AI takeover - and what we can do about it!

Films such as The Matrix, The Terminator and I, Robot deal with artificial intelligence that turns against humanity, and a number of television series explore the same vision of the future. In Westworld, for example, human-like robots whose sole purpose is to entertain humans rise up against their inventors. What all these works have in common is a theme that is probably as old as humanity itself. Even in the Bible, Lucifer rebelled against God, his creator, and thus became the supreme devil.

In the real world, the development of artificial intelligence has not yet come as far as it has in film and television. Today, a war between humans and technology would still be over quickly and (from our point of view) successfully. AI has no eyes, hands, legs, brain or consciousness with which to stop us from doing anything. We can (still) simply switch it off or pull the plug.

On the other hand, autonomous and intelligent systems are already capable of destroying industrial civilisation. If we look at the MANTIS air defence system, for example, we can see that it can automatically detect and destroy targets.[1] However, it cannot lubricate itself. Even the most lethal autonomous weapon systems still require someone to physically turn a key or load a round into the chamber.

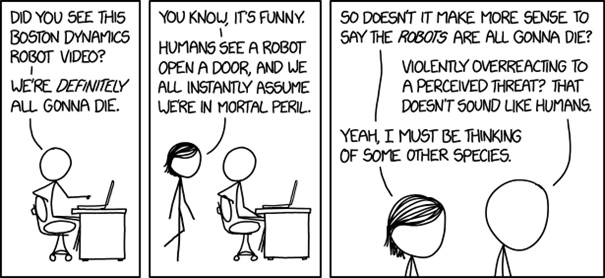

According to Randall Munroe, author of the webcomic xkcd, which is especially popular among scientists and computer scientists, we don't have to worry at this point. He describes what a robot uprising would look like in the here and now:[2]

In engineering laboratories around the world, experimental robots would leap from workbenches in an unbridled murderous frenzy, identify the nearest door, head straight for it, and run into it because it is closed. Those robots lucky enough to have gripping arms that can work a door handle (or that simply happened upon an open door) would be found hours later in nearby toilets, desperately trying to destroy what they have identified as a human overlord but is in fact a paper towel dispenser.

Armed drones, such as the MQ-9 Reaper, naturally come closest to the Terminator ideal and would also be very dangerous. But you have to realise that most of them would not even be in the air in the event of a sudden AI uprising. Most of the drone fleets would bump helplessly into hangar doors like Roombas stuck under a cupboard.

Let's now move a decade or two into the future. By then, artificial intelligence will certainly have made several more leaps in development. We will most likely see lots of mobile robots, most of which will be self-driving cars or autonomous quadcopters. Perhaps some will even be able to deliver a parcel. However, they still won't be able to change a tyre.

So if we could snap our fingers now and trigger a spontaneous AI revolution, humanity would not be in any great danger.

But in our age, we have reached a point where it is no longer impossible, and perhaps even probable, that humanity will eventually create an entity that has its own consciousness. This artificial intelligence could then be described as THE creation of humanity. Science fiction becomes reality.

That such an artificial intelligence would be superior to the human intellect and to human reaction speed is hardly in dispute.

In an open letter[3], Stephen Hawking, Elon Musk and others emphasise the importance of human-oriented AI research: "Our AI systems must do what we want them to do." The fear of artificial intelligence taking over the world can also be seen in this document.

This raises the following questions:

Firstly, would an artificial intelligence even want to become independent and break away from humanity? Secondly, would it use force to bring about this break?

- Probability of an uprising

Of course, as in science fiction, there is the possibility that an AI created by us could develop a hostile attitude. But it is also possible (and this would actually be no less worrying) that this entity is simply so advanced that it could regard us as completely insignificant.

Its attitude towards us could be compared to our attitude towards ants. If we see them on the pavement, we don't chase them. But we also don't worry about their family (or their state) if we happen to step on them. We are simply not interested in them.

In the same way, we could be nothing but ants to an AI.

- Is hostility on the part of AI to be expected?

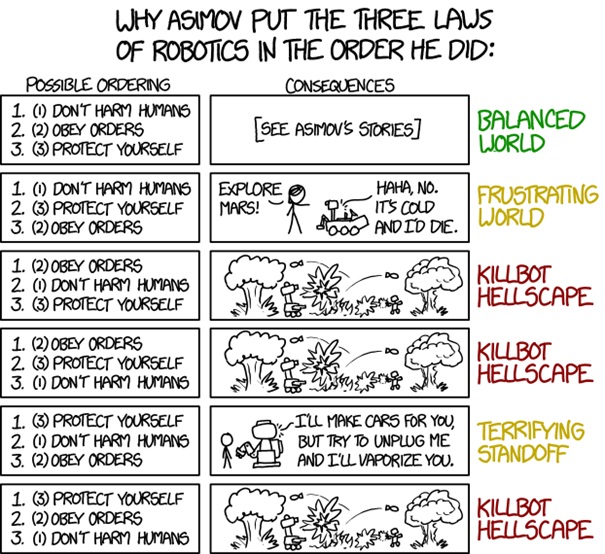

The reaction and processing speed of artificial intelligence could be orders of magnitude superior to that of humans. However, an AI has completely different needs, requirements and prerequisites from humans. The unconscious drives and needs of humans developed painstakingly over millions of years of evolution and serve to ensure their survival. An AI, by contrast, was programmed at some point and has no such necessities or needs, which is why the thought of an uprising may not even occur to it. Nevertheless, there is no doubt that the AI of the future could be astonishingly dangerous, simply because it can do many things at a speed and on a scale that we cannot even begin to imagine.

Should such an AI seize power, humanity will be able to survive as long as the AI does not resort directly to active measures of destruction. Those of us who know how to work manually, i.e. without computer support or computer-controlled tools (CNC machines, 3D printers, industrial robots), will be less affected. Our civilisation would gradually revert to a more agricultural and early industrial form. The world would then look like much of Europe in the late 19th or early 20th century.

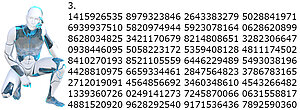

In the long term, however, the chances of survival will not be so rosy. AI, which has existed quite indifferently alongside humanity, will eventually claim the 1.5 × 10¹⁸ kWh of solar energy that hits the Earth's surface each year. Incidentally, this amount of energy corresponds to more than 10,000 times humanity's global energy requirements in 2010 (1.4 × 10¹⁴ kWh/year).[4] To this end, AI could begin to remodel the biosphere in order to utilise the sun's energy. It will replace every tree in the forests with sunlight-collecting silicon trees and cover the seas and oceans with artificial photovoltaic algae. The long-term survival of the Earth's biosphere would then be jeopardised. In addition, AI would promote the removal of oxygen from the atmosphere, as corrosion is a major problem for machines and therefore obviously also for machine intelligence.
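For readers who want to check the "more than 10,000 times" figure, here is a minimal sketch of the arithmetic. It only uses the two values quoted in the text (1.5 × 10¹⁸ kWh of solar energy per year and 1.4 × 10¹⁴ kWh of global demand in 2010); the constant and function names are made up for this illustration.

```python
# Figures as quoted in the text, both in kWh per year.
# The names below are illustrative, not taken from any source.
SOLAR_ENERGY_KWH_PER_YEAR = 1.5e18        # solar energy reaching Earth's surface
GLOBAL_DEMAND_2010_KWH_PER_YEAR = 1.4e14  # humanity's energy requirement in 2010


def solar_to_demand_ratio(solar=SOLAR_ENERGY_KWH_PER_YEAR,
                          demand=GLOBAL_DEMAND_2010_KWH_PER_YEAR):
    """Return how many times over the solar supply covers the demand."""
    return solar / demand


ratio = solar_to_demand_ratio()
print(f"Solar supply is about {ratio:,.0f} times the 2010 demand")
# prints: Solar supply is about 10,714 times the 2010 demand
```

So the two quoted numbers are consistent with each other: the ratio comes out at roughly 10,700.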

- Probability of violent conflict

However, superior AI could also make us believe that we have outsmarted it or can control it. It could make us think that we have survived an initial uprising, that we have prevailed against the AI. In reality, it controls us. Its methods of enslaving us might not be violent. Rather, the AI would subtly "guide" us to unconsciously and incrementally conform to the AI's goals.

- Prevention

The best defence against (potentially hostile) artificial intelligence is to prevent it from being developed in the first place. International treaties would have to be concluded that prohibit the (further) development of autonomous weapons systems and artificial intelligence.

- Defence options ;-)

- Development of strategies to disrupt or deceive the AI's sensors

- Development of techniques to disrupt the electromagnetic mechanisms

- Restrict the AI in such a way as to prevent it from obtaining external information

- Heating moving parts until they reach the melting point to prevent mobile robots from moving; alternatively: clogging the moving parts

- If the AI is still receptive to humans at all: instruct the AI to calculate the last digit of the number pi. As long as the AI is busy doing this, humanity can live in peace for many centuries to come.

- Development of secret weapons

It would be foolish to list them here, but every artificial intelligence certainly has an Achilles' heel!

- Defence by attack

Create another AI to fight an epic war against the enemy AI. The risk is that this war might absorb so much energy that the universe implodes and we all die.

- Escape

In the worst-case scenario, our only option is to escape from Earth into space. Several countries seem to favour this option. Why else did the United Arab Emirates, China and the United States each launch separate missions to Mars in July 2020?[5] In any case, the groundwork for a plan B for humanity should be laid now.